Performance growth of digital microchips is flattening out and energy consumption is becoming a serious issue. This impasse has re-invigorated two lines of investigation that have been explored for decades without yet leading to decisive breakthroughs. The first is learning from the brain with its amazing intelligence-per-watt ratio. The second is the exploration of unconventional physical substrates and nonlinear phenomena.

Our conference has two aims: to explore technological options arising from novel computational architectures in unconventional substrates; and to work towards a general, rigorous theory of computing in non-digital, "brain-like" physical substrates.

This is a profoundly interdisciplinary enterprise. Over three full days, we want to bring together researchers from neuroscience, mathematics, machine learning, computer engineering, physics and materials science in a single-track "think tank" setting with eye-opening plenary and keynote lectures and carefully selected oral presentations, complemented by comprehensive poster sessions.

The venue, the Castle of Herrenhausen, a legacy of the Kings of Hannover, has been transformed into an award-winning center for scientific events. The Herrenhausen Gardens stretch across hectares of classical French garden design. This unique setting and generous break times will make it easy and natural to engage in stimulating scientific exchange.

Our conference is held as a Herrenhäuser Conference, a framework offered by the Volkswagen Foundation for hosting events with a particularly interdisciplinary and open-minded character.

The event is generously supported by the Volkswagen Foundation.

Oral presenters will receive travel grants.

Professor, Stanford University, USA. Some research themes: neuromorphic engineering, silicon retina, mixed analog-digital platforms, computing with unreliable nanoscale devices, novel computing paradigms.

https://web.stanford.edu/group/brainsinsilicon/boahen.html

Professor, University of Bath, UK, and Princeton University, USA. Some research themes: theories of intelligence, AI models of cognitive systems, evolutionary and learning dynamics, action selection, human culture, primate behaviour, robot and AI ethics.

http://www.cs.bath.ac.uk/~jjb/

Professor, University of Waterloo, Canada; Director, Centre for Theoretical Neuroscience, Waterloo; Research Chair in Theoretical Neuroscience, Royal Society of Canada. Some research themes: theoretical and computational neuroscience, large-scale simulations of cognitive neural systems, philosophy of mind and language, philosophy of science.

http://arts.uwaterloo.ca/~celiasmi/

Robert S. Pepper Distinguished Professor, UC Berkeley, USA. Some research themes: models of computation with time and concurrency, architectures for real-time and distributed computing, fault tolerance, sensor networks, blending computing with continuous dynamics and hybrid systems.

https://ptolemy.eecs.berkeley.edu/~eal

Professor, EPFL Lausanne, Switzerland. Some research themes: multi-mode fiber optics, optofluidics for energy conversion, nanoparticles for imaging and optofluidics, imaging in complex media, superresolution imaging. Director for the National Science Foundation Center for Neuromorphic Systems Engineering (1996–1999).

https://lo.epfl.ch/

Professor, Vrije Universiteit, Amsterdam, and Academic Medical Centre, Amsterdam; Director of the Netherlands Institute for Neuroscience, NL. Some research themes: visual perception, plasticity and memory in the visual system, behavioral paradigms in humans, computational neuroscience.

https://nin.nl/research/researchgroups/roelfsema-group/

Professor of Computer Science, University of York, UK. Some research themes: non-standard computation (neural, biochemical, nanomolecular, evolutionary methods), non-von-Neumann architectures, quantum computing, artificial life, artificial immune systems.

https://www-users.cs.york.ac.uk/~susan/index.htm

Professor emeritus, former director of the Center for Interdisciplinary Research (ZiF), Bielefeld University, Germany. Some research themes: AI, cognitive learning research, natural language, expert and agent systems, virtual reality, multimodal interaction, affective computing, robotics, AI and robot ethics.

https://www.techfak.uni-bielefeld.de/~ipke/

Member of the resident faculty at the Santa Fe Institute, USA. Some research themes: nonequilibrium statistical physics to analyze the thermodynamics of computing systems; game theory for modeling humans operating in complex engineered systems; exploiting machine learning to improve optimization; and Monte Carlo methods.

https://www.santafe.edu/people/profile/david-wolpert

Professor, Institute of Cognitive Science, University of Osnabrück; University Clinics Hamburg Eppendorf, Germany. Some research themes: embodied cognition, multimodal integration, sensorimotor interaction, computational cognition.

https://portal.ikw.uni-osnabrueck.de/~NBP/PeterKoenig.html

Over the past years, technical solutions for control systems and data analysis have become increasingly cognitive. These cognitive systems unite machine learning and artificial intelligence with human interaction or brain-like information processing. This allows humans to use complex machine learning approaches to make better and more informed decisions. Furthermore, brain-computer interfaces that enrich or substitute human senses and behavioral control have been realized. Neuro-inspired machine learning tools such as deep learning have revolutionized the learning of complex data structures and act as glue within this fast-growing field. Cognitive applications are the driving force behind novel machine learning concepts and would profit significantly from fully neuromorphic computation. Suggested topics:

A major obstacle to a fruitful transfer from neuroscience to computer science is the lack of unifying mathematical modeling tools. Different aspects of neural information processing are captured with incommensurable kinds of formal models. These tools are furthermore often highly specialized and understood by only a limited in-group of dedicated experts. Altogether novel mathematical frameworks may be needed which can capture self-organization and pattern formation in terms that connect to information processing. We solicit contributions from mathematical modelers on themes such as the following indicative examples:

The human brain harbors billions of neurons connected via synapses to form a large-scale network. It is self-regulating, highly connected, multi-scale and consists of nonlinear devices. Computation is founded on an intrinsically parallel architecture. These features and their distinction from classical computation have stimulated the development of cognitive computing concepts. The objective of this session is to examine substrate and device models and realization strategies which aim at the design of "brain-like" computational systems in a wide sense. Suggested topics of this session include

Computation is currently limited to digital computers implemented on a two-dimensional silicon substrate, wherein Boolean switching circuits are realized with transistor gates. Novel substrates – whether optical, biochemical, nano-physical or quantum-effect-based – will not, as a rule, admit clean transistor-like switching of binary signals. To compensate, they offer a rich spectrum of nonlinear phenomena at extremely low levels of energy consumption and extremely high speed, full 3D parallelism and intrinsic adaptiveness. This session aims at displaying the wealth of potentially exploitable physical effects, and at hinting at device designs, in themes like

Biological brains thrive on a "hardware" which any computer engineer would perceive as doomed: unclocked, stochastic, heterogeneous, delay-ridden, degrading, super low-precision, with extreme device mismatches, unprogrammable, and on top of it all, sometimes in need of sleep. Yet this (non-)machinery achieves information processing feats that dwarf supercomputers – and it does so in a superbly robust manner. Future artificial computing substrates optimized for energy efficiency will share many of the seemingly excruciating characteristics of neuronal wetware. This session will consider themes like

| Day 1: 18.12.2018 | Timeslot |
|---|---|
| Registration | 0815 - 0845 |
| Welcome address: VW-Foundation and Minister President of Lower Saxony | 0845 - 0900 |
| Session 1/1: AC - Cognitive applications of current systems | 0900 - 1030 |
| Keynote AC: Susan Stepney | 0900 - 1000 |
| AC.C1: Yulia Sandamirskaya - Embodied Neuromorphic Cognition and Learning | 1000 - 1030 |
| Coffee break | 1030 - 1100 |
| Session 1/2: AC - Cognitive applications of current systems | 1100 - 1200 |
| AC.C2: Ingo Fischer - Photonic Reservoir Computers as Decoders for Optical Communication Systems | 1100 - 1130 |
| AC.C3: Sohum Datta - Towards a General-purpose Hyper-Dimensional Processor | 1130 - 1200 |
| Lunch break | 1200 - 1330 |
| Session 2: TM - Theoretical concepts and mathematical foundations | 1330 - 1600 |
| Keynote TM: Edward A. Lee | 1330 - 1430 |
| TM.C1: Christian Tetzlaff - The interplay between diverse plasticity mechanisms regulates the information storage and computation in neural circuits | 1430 - 1500 |
| TM.C2: Marcus K. Benna - High-capacity, low-precision synapses for neuromorphic devices | 1500 - 1530 |
| TM.C3: Andrea Ceni - Interpreting RNN behaviour via excitable network attractors | 1530 - 1600 |
| Coffee discussion plus lecture | 1600 - 1800 |
| Lecture: Peter König - Moral Decisions of AI Systems | 1630 - 1730 |
| Banquet | 1800 - 1930 |
| Public opening lecture: Minister of Science and Culture of Lower Saxony and Ipke Wachsmuth - From Computers to Cognitive Computing | 1930 - 2100 |

| Day 2: 19.12.2018 | Timeslot |
|---|---|
| Session 3/1: NH - Neuromorphic hardware | 0900 - 1030 |
| Keynote NH: Kwabena Boahen | 0900 - 1000 |
| NH.C1: Daniel Gauthier - FPGA-Based Autonomous Boolean Networks for Cognitive Computing | 1000 - 1030 |
| Coffee break | 1030 - 1100 |
| Session 3/2: NH - Neuromorphic hardware | 1100 - 1200 |
| NH.C2: Louis Andreoli - Impact and mitigation of noise in analogue spatio-temporal neural network | 1100 - 1130 |
| NH.C3: Thomas Van Vaerenbergh - Solving NP-Hard Problems Using Electronic and Optical Recurrent Neural Networks | 1130 - 1200 |
| Lunch break | 1200 - 1330 |
| Session 4: NS - Novel substrates | 1330 - 1600 |
| Keynote NS: Demetri Psaltis | 1330 - 1430 |
| NS.C1: Ripalta Stabile - Integrated Semiconductor Optical Amplifiers based Photonic Cross-Connect for Deep Neural Networks | 1430 - 1500 |
| NS.C2: Alice Mizrahi - Neural-like computing with stochastic nanomagnets | 1500 - 1530 |
| NS.C3: Julien Sylvestre - Blending Sensing and Computing in MEMS Devices | 1530 - 1600 |
| Coffee discussion | 1600 - 1700 |
| Plenary I: David Wolpert - Fundamental Limits on the Thermodynamics of Circuits | 1700 - 1800 |
| Poster session I | 1700 - 1930 |
| Banquet and Plenary II: Joanna J. Bryson | 2000 |

| Day 3: 20.12.2018 | Timeslot |
|---|---|
| Session 5/1: GT - Guides from neuroscience for computing technologies | 0900 - 1030 |
| Keynote GT: Pieter Roelfsema | 0900 - 1000 |
| GT.C1: Andreas Herz - The many ways to read from grid cells | 1000 - 1030 |
| Coffee break | 1030 - 1100 |
| Session 5/2: GT - Guides from neuroscience for computing technologies | 1100 - 1200 |
| GT.C2: Aditya Gilra - Local learning of forward and inverse dynamics in spiking neural networks for non-linear motor control | 1100 - 1130 |
| GT.C3: Yves Frégnac - From Big-Data-Driven Simulation Of The Brain To The Myth Of Transhumanism | 1130 - 1200 |
| Lunch break | 1200 - 1330 |
| Plenary III: Chris Eliasmith - Concerning Computing Brains | 1330 - 1430 |
| Coffee discussion | 1430 - 1530 |
| Poster session II | 1430 - 1700 |
| Poster prize | 1730 - 1800 |

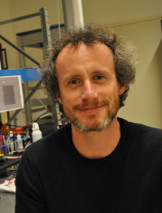

Dr. Daniel Brunner

FEMTO-ST Research Institute, Besançon, France.

http://members.femto-st.fr/daniel-brunner

Prof. Gordon Pipa

Institute of Cognitive Science, Osnabrück, Germany.

Prof. Herbert Jaeger

Jacobs University, Bremen, Germany.

https://www.jacobs-university.de/directory/hjaeger

Prof. Stuart Parkin

Max Planck Institute for Microstructure Physics, Germany.

http://www.mpi-halle.mpg.de/NISE/director